Wikipedia Network Maps

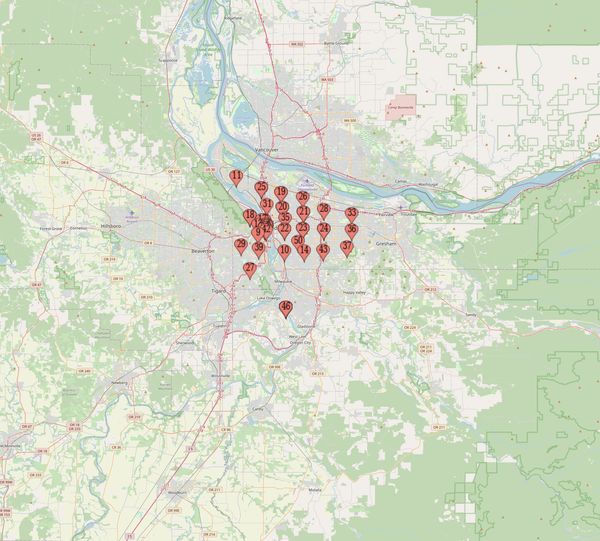

I have been working on a really exciting project with Ian Pearce and Max Darham that attempts to visualize Wikipedia.

Our idea was this:

How are articles on Wikipedia organically organized? For instance, the article on bicycles will likely link to the inventor of the modern bicycle, to rubber, steel, and the other materials and parts, to the countries that were important in advancing bicycle technology, and so forth. If we treat each article as a vertex and each link as an edge connecting it to another article, could we write a program that maps this data visually? We wanted to build a prototype data-mining project and then use the results to draw specific conclusions about how Wikipedia looks, works, and is organized, and whether it resembles any other known types of information networks.
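To make the vertex-and-link framing concrete, here is a minimal Python sketch. The article titles and links in `links` are made up for illustration and are not taken from our data; `edge_list` and `in_degree` are hypothetical helper names.

```python
# Hypothetical toy data: each key is an article (a vertex) and each value
# lists the articles it links to (directed edges).
links = {
    "Bicycle": ["Rubber", "Steel", "Germany"],
    "Rubber": ["Steel"],
    "Steel": [],
    "Germany": ["Bicycle"],
}

def edge_list(adjacency):
    """Flatten an adjacency dictionary into (source, target) pairs."""
    return [(src, dst) for src, targets in adjacency.items() for dst in targets]

def in_degree(adjacency):
    """Count how many articles link to each article."""
    counts = {name: 0 for name in adjacency}
    for _, dst in edge_list(adjacency):
        counts[dst] = counts.get(dst, 0) + 1
    return counts

edges = edge_list(links)
incoming = in_degree(links)
print(len(edges), "links in total")
print("Steel is linked from", incoming["Steel"], "articles")
```

A dictionary of lists like this is the same shape as the data we later hand to Sage.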

Our plan was this:

We wanted to build a Ruby program that visits a random article. For the English-language Wikipedia, you can do this with http://en.wikipedia.org/wiki/Special:Random.

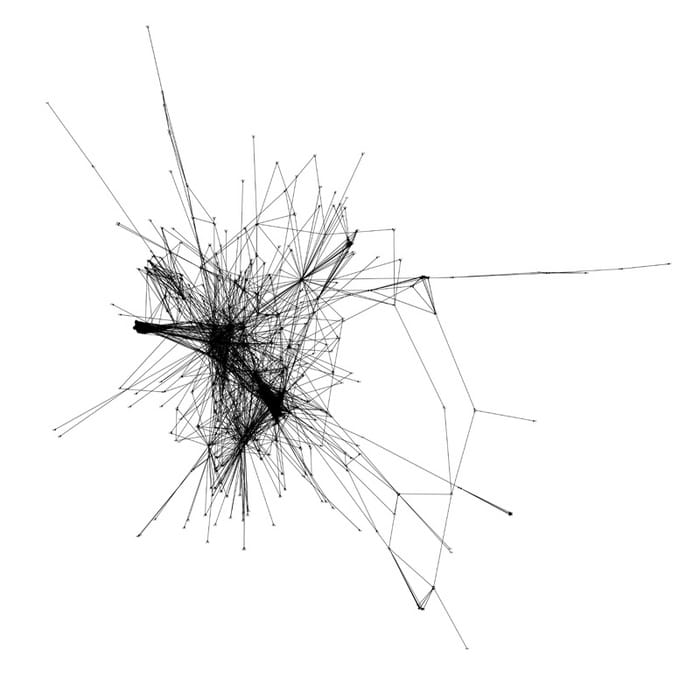

Then we wanted the program to store the page along with all of its links, follow each of those links and record the links on those pages, and so on, until we had a huge array of articles and the articles each one links to. We then wrote a little method that turns that information into a Python dictionary, and used Sage to create visuals of the data. Below are a few of the graphs we have generated so far. They show the largest connected components, that is, the largest groups of articles connected to one another by links. There are other articles in the data, but they don't link to anything and nothing links to them, so they are kind of boring. Check out the visualizations below, as well as large cuts of the code used to make them work!
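The crawl described above can be sketched in Python as a breadth-first traversal. This is only a sketch, not our Ruby code: it uses a queue instead of literal recursion, and `FAKE_WIKI`, `fetch_links`, and `crawl` are hypothetical names, with `fetch_links` standing in for downloading a page and pulling out its links.

```python
from collections import deque

# Hypothetical stand-in for the real site: title -> titles it links to.
FAKE_WIKI = {
    "A": ["B", "C"],
    "B": ["A", "D"],
    "C": [],
    "D": ["B"],
    "E": ["A"],  # never reached from "A", because nothing we visit links to it
}

def fetch_links(title):
    """Pretend to download an article and return the titles it links to."""
    return FAKE_WIKI.get(title, [])

def crawl(start, limit=1000):
    """Follow links outward from `start`, recording each article's links."""
    graph = {}
    queue = deque([start])
    while queue and len(graph) < limit:
        title = queue.popleft()
        if title in graph:
            continue  # already analyzed
        graph[title] = list(fetch_links(title))
        for linked in graph[title]:
            if linked not in graph:
                queue.append(linked)
    return graph

graph = crawl("A")
print(sorted(graph))
```

The `limit` argument plays the same role as a cap on the number of articles: without it, a crawl of a real wiki would keep going for a very long time.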


Actual Sage Code Used to make it all work:

# NOTE: the following language sets are available on my server: als ang bar ga ia kg li nov nrm pdc qu sco vls war

# Load the adjacency dictionary ({article id: [linked article ids]}) for one language.
g = eval(open(get_remote_file('http://devingaffney.com/files/wiki_data/kg/kg_data.txt')).read())

wiki_graph = Graph(g)      # undirected: a link in either direction connects two articles
wiki_digraph = DiGraph(g)  # directed: links keep their direction

wg_conncomp = wiki_graph.connected_components_subgraphs()
wdg_conncomp = wiki_digraph.connected_components_subgraphs()

print ""
print "GRAPH"
print "Total articles:", wiki_graph.num_verts()

# dh[d] is the number of articles with degree d.
dh = wiki_graph.degree_histogram()
dh_plot = list_plot(dh, plotjoined=True)

print "Degree histogram:"
# enumerate starts at 0, so each count is printed next to its actual degree.
for degree, count in enumerate(dh):
    if count != 0:
        print degree, count

dh_plot.axes_range(0,200,0,60)
dh_plot.show()

# Average degree over all articles.
graph_degree_total = 0
for deg in wiki_graph.degree_iterator():
    graph_degree_total = deg+graph_degree_total
# Convert before dividing so the result is never truncated to an integer.
graph_average_degree = float(graph_degree_total)/wiki_graph.num_verts()

print ""
print "GRAPH : LARGEST CONNECTED COMPONENT"
print "Total articles in largest connected component:", wg_conncomp[0].num_verts()
print "Diameter of largest connected component:", wg_conncomp[0].diameter()

# Average shortest-path length over all pairs of articles in the component.
# Note: this includes each article's zero-length path to itself.
array = []
counter = 0
for x in wg_conncomp[0].vertices():
    for y in wg_conncomp[0].vertices():
        length = wg_conncomp[0].shortest_path_length(x,y)
        array.append(length)
for x in array:
    counter = x+counter
average_path_length = float(counter)/len(array)

print "Average path length:", average_path_length
print "Clustering Average:", wg_conncomp[0].clustering_average()
print "Average degree:", graph_average_degree
print "Clique number (size of largest clique):", wiki_graph.clique_number()

wg_conncomp[0].show(vertex_size=2, fontsize=2, figsize=[75,75],
                    filename='wikipedia_crcl.png', layout="circular")

print ""
print "DIGRAPH"

dh = wiki_digraph.degree_histogram()
dh_plot = list_plot(dh, plotjoined=True)

print "Degree histogram:"
# enumerate starts at 0, so each count is printed next to its actual degree.
for degree, count in enumerate(dh):
    if count != 0:
        print degree, count

# Average out-degree: the mean number of links leaving an article.
digraph_degree_total = 0
for deg in wiki_digraph.out_degree_iterator():
    digraph_degree_total = deg+digraph_degree_total
digraph_average_degree = float(digraph_degree_total)/wiki_digraph.num_verts()

dh_plot.axes_range(0,200,0,60)
dh_plot.show()

print ""
print "DIGRAPH : LARGEST CONNECTED COMPONENT"
print "Total articles in largest connected component:", wdg_conncomp[0].num_verts()
print "Clustering Average:", wdg_conncomp[0].clustering_average()
print "Average out-degree:", digraph_average_degree

wdg_conncomp[0].show(vertex_size=2, fontsize=2, figsize=[75,75],
                     filename='digraph_wikipedia.png')
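For readers without Sage, the connected-component and average-path-length numbers can be approximated in plain Python. This is a sketch under assumptions, not what Sage does internally: all function names and the `toy` data are hypothetical, links are treated as undirected, and the average here excludes an article's zero-length path to itself.

```python
from collections import deque

def undirected(adjacency):
    """Symmetrize a directed adjacency dict so every link counts both ways."""
    sym = {v: set() for v in adjacency}
    for src, targets in adjacency.items():
        for dst in targets:
            sym.setdefault(dst, set())
            if src != dst:
                sym[src].add(dst)
                sym[dst].add(src)
    return sym

def bfs_distances(sym, start):
    """Shortest path length (in links) from `start` to each reachable vertex."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        v = queue.popleft()
        for w in sym[v]:
            if w not in dist:
                dist[w] = dist[v] + 1
                queue.append(w)
    return dist

def connected_components(sym):
    """Return the components as sets of vertices, largest first."""
    seen, components = set(), []
    for v in sym:
        if v not in seen:
            comp = set(bfs_distances(sym, v))
            seen |= comp
            components.append(comp)
    return sorted(components, key=len, reverse=True)

def average_path_length(sym, component):
    """Mean shortest-path length over ordered pairs of distinct vertices."""
    total, pairs = 0, 0
    for v in component:
        for w, d in bfs_distances(sym, v).items():
            if w != v:
                total += d
                pairs += 1
    return float(total) / pairs if pairs else 0.0

# Hypothetical toy data in the same {id: [linked ids]} shape as our data files.
toy = {0: [1], 1: [2], 2: [], 3: [4], 4: [], 5: []}
sym = undirected(toy)
components = connected_components(sym)
largest = components[0]
print(len(largest), average_path_length(sym, largest))
```

Vertex 5 in the toy data is one of the "boring" articles: it links to nothing and nothing links to it, so it forms a component of its own.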

And the Ruby classes we originally used to collect the datasets above:

class Wikipedia
  attr_accessor :articles, :articles_by_hash

  def initialize()
    @articles = []          # every Article encountered so far
    @articles_by_hash = []  # hashes of the article names already seen
  end

  # article_array is [title, links], as returned by LinkGrabz#export.
  def make_article_object(article_array)
    if !@articles_by_hash.include?(article_array[0].hash)
      # The article is being encountered for the first time:
      # record its hash and make a new Article for it.
      @articles_by_hash << article_array[0].hash
      new_article = Article.new(article_array[0])
      links_array = article_array[1]
      links_array.each do |linked_article|
        if !@articles_by_hash.include? linked_article.hash
          # The link is being encountered for the first time: record its hash,
          # make a new Article, and add it to @articles and to new_article's links.
          @articles_by_hash << linked_article.hash
          new_linked_article = Article.new(linked_article)
          @articles << new_linked_article
          new_article.links << new_linked_article
        else
          # The link is already known: point at the existing Article.
          @articles.each do |article|
            if article.hash_number == linked_article.hash
              new_article.links << article
              break
            end
          end
        end
      end
      new_article.analyzed = true
      @articles << new_article
    else
      # The article was seen before as a link target:
      # fill in its links if it has not been analyzed yet.
      @articles.each do |article|
        if (article.hash_number == article_array[0].hash) and !article.analyzed
          links_array = article_array[1]
          links_array.each do |linked_article|
            if !@articles_by_hash.include? linked_article.hash
              @articles_by_hash << linked_article.hash
              new_linked_article = Article.new(linked_article)
              @articles << new_linked_article
              article.links << new_linked_article
            else
              @articles.each do |existing_article|
                if existing_article.hash_number == linked_article.hash
                  article.links << existing_article
                  break
                end
              end
            end
          end
          article.analyzed = true
        end
      end
    end
  end

  # Build the whole {hash: [linked hashes]} dictionary as one string, then print it.
  def print_for_sage_string
    graph_string = "{"
    @articles.each do |article|
      graph_string = graph_string + article.hash_number.to_s + ": ["
      article.links.each do |article_link|
        graph_string = graph_string + article_link.hash_number.to_s + ", "
      end
      graph_string = graph_string + "], "
    end
    graph_string = graph_string + "}"
    # Strip the trailing separators left behind by the loops above.
    graph_string = graph_string.gsub(", ]", "]")
    graph_string = graph_string.gsub(", }", "}")
    print "\n"
    print graph_string
    print "\n\n"
  end

  # Same dictionary, printed piece by piece (trailing separators are left in).
  def print_for_sage
    print "{"
    @articles.each do |article|
      print article.hash_number.to_s + ": ["
      article.links.each do |article_link|
        print article_link.hash_number.to_s + ", "
      end
      print "], "
    end
    print "}\n\n"
  end

  # Print each article followed by the articles it links to.
  def print_list
    @articles.each do |article|
      print "\n" + " => [" + article.name + "] >> "
      if article.links.length == 0
        print "nothing"
      end
      article.links.each do |article_link|
        print "[" + article_link.name + "], "
      end
    end
    print "\n\n"
  end

  # Replace each article's hash with a small sequential id (0, 1, 2, ...).
  def hash_reformat
    @articles.each_with_index do |article, i|
      article.hash_number = i
    end
  end

  def find_title_by_hash(int) # find_title_by_hash(6), not ("6")
    @articles.each do |article|
      return article.name if article.hash_number == int
    end
    nil # no article has that hash
  end

  # Print every article whose name contains article_name (case-insensitive).
  def find_hash_by_title(article_name)
    article_name = article_name.downcase # avoid modifying the caller's string
    @articles.each do |article|
      if article.name.downcase.include?(article_name)
        print article.name + ": " + article.hash_number.to_s + "\n"
      end
    end
    print "Went through all articles.\n"
  end

  # Emit a Sage function that labels each vertex with its article name.
  def label_for_sage
    print "def label_wiki(wiki_graph):\n"
    @articles.each do |article|
      print " wiki_graph.set_vertex(" + article.hash_number.to_s + ", \"" + article.name + "\")\n"
    end
  end
end

require 'rubygems'
require 'hpricot'
require 'open-uri'
require 'cgi'

class LinkGrabz
  attr_accessor :body, :link_array, :visited, :title, :url, :language_prefix, :url_prefix

  def initialize(language_prefix)
    @language_prefix = language_prefix
    # Articles are read from a local copy of the wiki for this language.
    @url_prefix = "http://localhost/~ian/wikipedia/" + @language_prefix + "/articles"
    @body = ""
    @link_array = []
    @url = ""
  end

  # Ask the live wiki for a random article, then load that article from the local copy.
  def special_grabber
    html = Hpricot(open("http://"+@language_prefix+".wikipedia.org/wiki/Special:Random"))
    if !html.at('//div[@class="printfooter"]').nil? and !html.at('//div[@class="printfooter"]').children.nil?
      article_name = html.at('//div[@class="printfooter"]').children.select{|e| e}
      article_name = article_name[1].inner_html
    elsif !html.at('//title[@=empty()]').nil? and !html.at('//title[@=empty()]').children.nil?
      article_name = html.at('//title[@=empty()]').children.select{|e| e}
      article_name = article_name[1].inner_html
    else
      #special_grabber
      return # was `break`, which is not valid outside a loop or block
    end
    article_name = article_name.gsub("http://"+@language_prefix+".wikipedia.org/wiki/","")
    # The local copy appears to file each article under three nested directories
    # named after the first three characters of its title. A percent-encoded
    # character takes up three characters ("%XX"), hence the cases below.
    if article_name.length > 9
      if article_name[0,1].include?("%")
        if article_name[3,1].include?("%")
          if article_name[6,1].include?("%")
            grablink(@url_prefix + "/" + CGI::unescape(article_name[0,3]) + "/" + CGI::unescape(article_name[3,3]) + "/" + CGI::unescape(article_name[6,3]) + "/" + article_name + ".html")
          else
            grablink(@url_prefix + "/" + CGI::unescape(article_name[0,3]) + "/" + CGI::unescape(article_name[3,3]) + "/" + article_name[4,1].downcase + "/" + article_name + ".html")
          end
        # NOTE: article_name[4,1] is always truthy for names this long, so the
        # else branch below is never reached; this may have been meant as
        # article_name[4,1].include?("%").
        elsif article_name[4,1]
          grablink(@url_prefix + "/" + article_name[0,1].downcase + "/" + article_name[3,1].downcase + "/" + CGI::unescape(article_name[4,3]) + "/" + article_name + ".html")
        else
          grablink(@url_prefix + "/" + CGI::unescape(article_name[0,3]) + "/" + article_name[3,1].downcase + "/" + article_name[4,1].downcase + "/" + article_name + ".html")
        end
      elsif article_name[1,1].include?("%")
        if article_name[4,1].include?("%")
          grablink(@url_prefix + "/" + article_name[0,1].downcase + "/" + CGI::unescape(article_name[1,3]) + "/" + CGI::unescape(article_name[4,3]) + "/" + article_name + ".html")
        else
          grablink(@url_prefix + "/" + article_name[0,1].downcase + "/" + CGI::unescape(article_name[1,3]) + "/" + article_name[4,1].downcase + "/" + article_name + ".html")
        end
      elsif article_name[2,1].include?("%")
        grablink(@url_prefix + "/" + article_name[0,1].downcase + "/" + article_name[1,1].downcase + "/" + CGI::unescape(article_name[3,3]) + "/" + article_name + ".html")
      else
        grablink(@url_prefix + "/" + article_name[0,1].downcase + "/" + article_name[1,1].downcase + "/" + article_name[2,1].downcase + "/" + article_name + ".html")
      end
    else
      # Short titles are skipped: try another random article.
      special_grabber
    end
  end

  # Load one article from the local copy and keep its content block.
  def grablink(url)
    html = Hpricot(open(url)) # open the page once and reuse the parsed result
    if !html.nil?
      @body = html.search("//div[@id='content']")
      @url = url
      @title = @url.gsub(@url_prefix,"")
    else
      print "\nEncountered broken link.\n"
    end
  rescue OpenURI::HTTPError, URI::InvalidURIError
    # The page could not be loaded: fall back to a fresh random article.
    special_grabber
  end

  # Collect the unique article links found in the current page body.
  def extract
    @link_array.clear
    (@body/"a[@href]").each do |url|
      # Keep only hrefs that look like article paths: /x/y/z/Name.html
      new_url = url.attributes['href'].match(/(\/.\/.\/.\/.*)/).to_s
      if new_url.length != 0 and !new_url.include?("~") and !new_url.include?("#") and !new_url.include?("%7E")
        @link_array << new_url
      end
    end
    @link_array = @link_array.uniq
  end

  def export
    return [@title, @link_array]
  end
end

class Article
  attr_accessor :name, :hash_number, :links, :analyzed

  def initialize(name)
    @name = name
    @hash_number = name.hash
    @links = []
    @analyzed = false
  end
end

@wiki = Wikipedia.new()

# Crawl until article_number_limit articles have been collected, or until
# special_grabber_limit random picks in a row were already known.
def run(language_prefix, article_number_limit, special_grabber_limit)
  @test = LinkGrabz.new(language_prefix)
  @stop = 0
  while @wiki.articles.length < article_number_limit and @stop < special_grabber_limit
    @test.special_grabber
    if !@wiki.articles_by_hash.include? @test.title.hash
      @stop = 0
      @articles_to_be_analyzed = []
      print "\nRandomly grabbed: " + @test.title
      @test.extract
      @wiki.make_article_object(@test.export)
      print "\nExported to @wiki.\n\nSorting unanalyzed articles..."
      # Queue every article whose links have not been collected yet.
      @wiki.articles.each do |article|
        if !article.analyzed
          if article.links.length > 0
            article.analyzed = true
          else
            @articles_to_be_analyzed << article
          end
        end
      end
      while @articles_to_be_analyzed.length > 0
        i = 1
        atba_length = @articles_to_be_analyzed.length.to_s
        @articles_to_be_analyzed.each do |article|
          @test.grablink(@test.url_prefix + article.name)
          @test.extract
          @wiki.make_article_object(@test.export)
          article.analyzed = true
          print "\nAnalyzed article " + i.to_s + " of " + atba_length + " unanalyzed articles: " + article.name
          i += 1
        end
        old_atba = @articles_to_be_analyzed
        @articles_to_be_analyzed = []
        print "\n\nResorting unanalyzed articles..."
        @wiki.articles.each do |article|
          if !article.analyzed
            if article.links.length > 0
              article.analyzed = true
            else
              @articles_to_be_analyzed << article
            end
          end
        end
        # Stop if the pass made no progress.
        break if old_atba == @articles_to_be_analyzed
        print "\n\nTotal articles encountered: " + @wiki.articles.length.to_s + "\n"
      end
    else
      print "Article already analyzed: " + @test.title
      @stop += 1
    end
    print "\n\nTotal articles encountered: " + @wiki.articles.length.to_s + "\n"
  end
  @wiki.hash_reformat
end
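The hand-off from the Ruby side to Sage boils down to renumbering the articles and printing a dictionary literal, which can be sketched in Python as follows. This mirrors the idea behind `hash_reformat` and `print_for_sage_string` rather than reproducing them. The names `renumber` and `to_sage_literal` and the sample paths are hypothetical, and `ast.literal_eval` is swapped in here as a safer alternative to `eval` for reading the text back.

```python
import ast

def renumber(adjacency_by_name):
    """Assign each article a small integer id and rewrite links to use ids."""
    ids = {}
    for name, targets in adjacency_by_name.items():
        ids.setdefault(name, len(ids))
        for target in targets:
            ids.setdefault(target, len(ids))
    by_id = {i: [] for i in ids.values()}
    for name, targets in adjacency_by_name.items():
        by_id[ids[name]] = [ids[t] for t in targets]
    return ids, by_id

def to_sage_literal(by_id):
    """Serialize as a dictionary literal such as {0: [1, 2], 1: []}."""
    items = []
    for vertex in sorted(by_id):
        targets = ", ".join(str(t) for t in by_id[vertex])
        items.append("%d: [%s]" % (vertex, targets))
    return "{" + ", ".join(items) + "}"

# Hypothetical sample in the /x/y/z/Name.html style the crawler stores as names.
pages = {
    "/b/i/c/Bicycle.html": ["/r/u/b/Rubber.html", "/s/t/e/Steel.html"],
    "/r/u/b/Rubber.html": [],
}
ids, by_id = renumber(pages)
text = to_sage_literal(by_id)

# literal_eval only accepts plain literals, so it cannot run arbitrary code.
parsed = ast.literal_eval(text)
print(text)
```

Keeping the `ids` mapping around is what makes it possible to label vertices with article names again later.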